What Is an AI Operating System

Date: Feb 14, 2026

BrainFrame is an AI Operating System—but what exactly does that mean?

Today, the term “Operating System” is overloaded. Does it mean another version of Linux or Windows? Something like Android or iOS? Or something entirely different?

This article clarifies what we mean by an AI Operating System (AI OS)—and why it represents a fundamental shift in AI computing.

Rethinking the Meaning of “Operating System”

There is no single fixed definition of an operating system. However, most people would agree that an OS has two defining characteristics:

- It provides common applications that users rely on every day—without requiring them to understand the underlying technical complexity.

- It offers APIs and abstractions that allow developers to easily build new applications on top of it.

In the early days of personal computing, the Mac OS and Windows popularized the Graphical User Interface (GUI): mouse, cursor, keyboard, windows. The GUI became synonymous with what an OS was.

In the mobile era, touchscreens, cameras, GPS, and connectivity reshaped the experience. The mouse became largely irrelevant. Traditional “windows” disappeared. Scrollbars vanished. What mattered most became the application ecosystem—calling, messaging, browsers, and app stores.

Across both eras, every successful OS solves two key problems:

- Provide essential daily-use applications from day one.

- Provide intuitive APIs that abstract complexity and enable large-scale developer innovation.

The AI Adoption Problem

AI today faces the same adoption barrier.

While AI can theoretically do millions of things, its power remains locked behind layers of hardware, infrastructure, and algorithmic complexity.

Since 2023, chat has become the most widely adopted AI interface. But beyond chat, building AI systems requires assembling:

- Compute hardware and accelerators (CPUs, GPUs, NPUs)

- Media processing pipelines

- Model orchestration

- Deployment systems

- Data pipelines

- Scenario-specific model tuning

For most developers, building AI applications beyond chat remains expensive and technically demanding. So AI computing faces the same two questions every OS must answer:

- What are the common, daily-use applications that AI provides?

- What abstractions and APIs enable scalable AI application development?

The Core AI Challenge: Models Are Application-Aware

This is the defining challenge of AI: models are inherently application-aware. Traditional OS engineering focused on abstracting hardware so that applications could sit ‘on top’ without the OS needing to understand their logic. If AI inference is integrated into the OS itself, rather than treated as a disconnected application, this separation breaks. A vision model designed to detect defects on a manufacturing line, for example, must be trained with data from that specific environment. Because the model’s intelligence is tied to its context, the OS can no longer stay ignorant of what the application is doing.

This creates a new systems challenge:

- Models must be customizable.

- Algorithms must be swappable.

- Inference must adapt to context.

- Media pipelines must align with the use case.

- Deployment must remain manageable for non-experts.

In other words: An AI OS cannot simply abstract hardware. It must abstract application-aware intelligence.

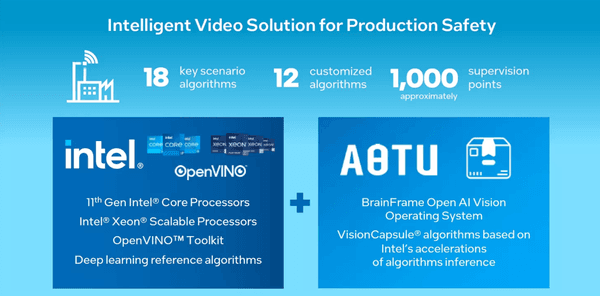

This is precisely the problem BrainFrame | VisionCapsule is designed to solve—by modularizing AI capabilities into deployable, composable, scenario-adaptable building blocks.

AI Complexity Is More Than Acceleration

At first glance, AI might appear to be just another layer added to Linux or Android—mainly inference acceleration through CPUs, GPUs, or NPUs. But AI fundamentally reshapes the stack.

AI systems process:

- Video

- Images

- Audio

- Text

- Speech

- Multimodal streams

This impacts:

- Codecs

- Media pipelines

- Databases

- Real-time scheduling

- Network communication

- Storage, memory, and nearly every other system component.

And because models are application-aware, the OS must coordinate media processing, inference scheduling, and algorithm selection in a unified architecture.

Thanks to decades of progress in Linux, plus container runtimes such as Docker and orchestration systems such as Kubernetes, we do not need to start from scratch. But we do need a new operating layer purpose-built for AI workloads and application-aware intelligence.

What Are the Common Applications of an AI OS?

Text-based chat is one such daily-use AI application. People use it for research, content generation, and productivity.

However, most of that interaction remains confined to the digital world.

Several of my former colleagues at Magic Leap, including Jeremy Leibs and Josh Faust, previously worked on ROS (Robot Operating System) at Willow Garage. ROS enables robots to navigate and interact with the physical world. It continues to evolve as a powerful technology framework. However, it has not become a mass-adopted operating system in the consumer sense, largely because a common, universal daily robot application has not yet been invented.

If Android had launched without calling, email, or a browser, we would not have seen global adoption.

The same principle applies to AI.

The 1.5 Billion Stationary Camera Opportunity

Today, there are over 1.5 billion CCTV cameras deployed worldwide.

Each of these cameras continuously streams video for safety and security.

These cameras cover:

- Residential buildings

- Offices

- Factories

- Transportation hubs

- Retail environments

They observe the places where daily life happens.

Within this infrastructure lies a massive, underutilized compute and data layer.

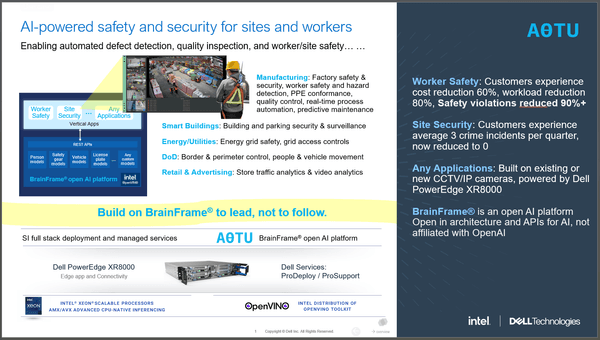

We believe there is a generational opportunity to build an AI OS industry around stationary cameras—starting with safety and security, while enabling developers worldwide to build thousands of AI applications on top of that foundation.

For Regular Users

An AI OS should allow users to deploy AI without hiring an engineering team. For example:

- Connect security cameras in a residential building.

- Configure the system to detect unfamiliar individuals.

- Have the AI speak to visitors.

- Trigger alarms.

- Notify property managers.

All without writing code. AI becomes practical, autonomous, and local.

For Developers

Developers should not need to become machine learning experts to build AI applications. Instead, they can start from modular AI atomic blocks such as:

- Object detection

- Activity classification

- Face recognition

- Anomaly detection

From these blocks, they can build applications for:

- Fire alerts

- Safety compliance monitoring

- Intrusion detection

- Industrial automation notifications

BrainFrame abstracts the complexity of media processing, inference acceleration, and application-aware model management—so developers can focus on solving real-world problems.
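To make the building-block idea concrete, here is a minimal Python sketch of an intrusion-detection rule layered on top of an object-detection block. It assumes a hypothetical `Detection` record (label, confidence, pixel bounding box) and a restricted zone given as a polygon; neither is BrainFrame's actual data format. The rule applies a standard ray-casting point-in-polygon test to the bottom-center of each box, as a rough stand-in for where a person is standing.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Detection:
    """Hypothetical output of an object-detection block (not BrainFrame's schema)."""
    label: str
    confidence: float
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def point_in_polygon(x: float, y: float, polygon: List[Point]) -> bool:
    """Ray casting: a point is inside if a rightward ray crosses an odd number of edges."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge spans the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def intrusion_alerts(detections: List[Detection], zone: List[Point],
                     label: str = "person", min_confidence: float = 0.5) -> List[Detection]:
    """Keep confident detections of `label` whose bottom-center point falls inside `zone`."""
    alerts = []
    for det in detections:
        if det.label != label or det.confidence < min_confidence:
            continue
        x1, _, x2, y2 = det.box
        if point_in_polygon((x1 + x2) / 2, y2, zone):
            alerts.append(det)
    return alerts


# Usage: one person inside the zone, one outside, and a car the rule ignores.
restricted = [(100, 100), (400, 100), (400, 400), (100, 400)]
frame = [
    Detection("person", 0.91, (150, 200, 250, 380)),
    Detection("person", 0.88, (500, 200, 600, 380)),
    Detection("car", 0.95, (150, 200, 250, 380)),
]
print(len(intrusion_alerts(frame, restricted)))  # 1
```

Note that the rule never looks inside the detector: swapping in a different detection model would leave it unchanged, which is the kind of separation described above.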

Beyond Safety and Security Applications

Imagine if every building had a local AI server:

- A thin receptionist device with camera, speaker, and microphone at the front desk.

- A kitchen assistant that guides cooking.

- A local voice interface running entirely at the edge.

- No cloud dependency.

- Reduced privacy risk, since video and audio stay on site.

- A unified and intuitive user experience.

This is the architectural direction of AI computing in the physical world. This is why we created BrainFrame.

APIs and Design for an AI OS

To support application-aware intelligence at scale, an AI OS must abstract three major engineering domains:

1. Media Processing Pipeline

Video, audio, text, speech, and multimodal fusion—combined and optimized for real-time workloads.
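One recurring requirement of a real-time video pipeline is that a slow inference stage must not accumulate a backlog of stale frames. A common approach is a small buffer that keeps only the newest frames and drops the rest. The sketch below is a generic illustration of that technique; `LatestFrameBuffer` is a hypothetical name, not a BrainFrame component.

```python
import threading
from collections import deque
from typing import Any, Optional


class LatestFrameBuffer:
    """Bounded frame buffer that favors freshness over completeness.

    A decoder thread calls put() at camera rate; an inference thread calls
    get_latest(), receives the newest frame, and older frames are discarded.
    """

    def __init__(self, capacity: int = 1):
        self._frames: deque = deque(maxlen=capacity)  # appending to a full deque evicts the oldest
        self._cond = threading.Condition()
        self.dropped = 0  # frames that were never handed to inference

    def put(self, frame: Any) -> None:
        with self._cond:
            if len(self._frames) == self._frames.maxlen:
                self.dropped += 1
            self._frames.append(frame)
            self._cond.notify()

    def get_latest(self, timeout: Optional[float] = None) -> Any:
        """Wait for a frame, return the newest one, and discard anything older.

        Returns None if no frame arrives within `timeout` seconds.
        """
        with self._cond:
            if not self._cond.wait_for(lambda: len(self._frames) > 0, timeout=timeout):
                return None
            frame = self._frames.pop()
            self.dropped += len(self._frames)
            self._frames.clear()
            return frame


# Usage: the decoder produces five frames before inference is ready for one.
buf = LatestFrameBuffer(capacity=2)
for frame_id in range(5):
    buf.put(frame_id)
print(buf.get_latest())  # 4
print(buf.dropped)       # 4
```

The trade-off is deliberate: for alerts, acting on the current scene usually matters more than processing every frame.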

2. Inference Acceleration

Dynamic scheduling across CPUs, GPUs, TPUs, and NPUs—adapting to evolving acceleration software and workload demands.
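As an illustration of what dynamic scheduling can mean, here is a sketch of an earliest-finish-time heuristic: each request goes to the device expected to finish it soonest, given per-model latencies and the work already queued. The `Device` record, the latency figures, and the heuristic itself are assumptions chosen for illustration; the article does not describe BrainFrame's actual scheduler.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Device:
    name: str
    kind: str                           # "cpu", "gpu", "npu", ...
    ms_per_inference: Dict[str, float]  # model name -> measured latency on this device
    queued_ms: float = 0.0              # estimated work already assigned to this device


def pick_device(devices: List[Device], model: str) -> Device:
    """Assign one request to the device with the earliest estimated finish time."""
    candidates = [d for d in devices if model in d.ms_per_inference]
    if not candidates:
        raise ValueError(f"no device can run {model!r}")
    best = min(candidates, key=lambda d: d.queued_ms + d.ms_per_inference[model])
    best.queued_ms += best.ms_per_inference[model]
    return best


def complete(device: Device, model: str) -> None:
    """Call when a request finishes so the device's queue estimate shrinks."""
    device.queued_ms = max(0.0, device.queued_ms - device.ms_per_inference[model])


# Usage: a detector runs on both CPU and GPU; a classifier is only available on the CPU.
devices = [
    Device("cpu0", "cpu", {"detector": 100.0, "classifier": 30.0}),
    Device("gpu0", "gpu", {"detector": 15.0}),
]
assignments = [pick_device(devices, "detector").name for _ in range(10)]
print(assignments.count("gpu0"), assignments.count("cpu0"))  # 9 1
```

The GPU takes most requests until its queue grows long enough that the slower CPU would finish sooner. In a real deployment the latency table would presumably come from profiling on the target hardware and be refreshed as drivers and runtimes change.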

3. Model and Algorithm Orchestration

Supporting:

- Encapsulated algorithms

- Scenario-specific model tuning

- Modular deployment

- Runtime composability
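These orchestration properties (encapsulation, swappable models, runtime composability) can be illustrated with a small registry in which each algorithm is a self-contained unit behind one shared interface. This is a hypothetical sketch: `CapsuleRegistry` and the function-based capsule shape are invented for illustration and are not the VisionCapsule format.

```python
from typing import Any, Callable, Dict, List

# Hypothetical capsule shape: a function that reads earlier results and returns new ones.
Capsule = Callable[[Dict[str, Any]], Dict[str, Any]]


class CapsuleRegistry:
    def __init__(self) -> None:
        self._capsules: Dict[str, Capsule] = {}
        self._order: List[str] = []  # execution order = load order

    def load(self, name: str, capsule: Capsule) -> None:
        """Add a capsule at runtime, or replace one in place (same position in the chain)."""
        if name not in self._capsules:
            self._order.append(name)
        self._capsules[name] = capsule

    def unload(self, name: str) -> None:
        del self._capsules[name]
        self._order.remove(name)

    def run(self, frame: Any) -> Dict[str, Any]:
        """Run every capsule in order; each one sees the outputs of the capsules before it."""
        results: Dict[str, Any] = {"frame": frame}
        for name in self._order:
            results.update(self._capsules[name](results))
        return results


# Stand-ins for real models: each "detector" just returns fixed labels.
def detector_v1(results):
    return {"labels": ["person"]}


def detector_v2(results):
    return {"labels": ["person", "helmet"]}


def helmet_rule(results):
    labels = results["labels"]
    return {"missing_helmet": "person" in labels and "helmet" not in labels}


# Usage: build a safety rule on a detector, then swap the detector without touching the rule.
registry = CapsuleRegistry()
registry.load("detector", detector_v1)
registry.load("helmet_rule", helmet_rule)
print(registry.run(frame=None)["missing_helmet"])  # True
registry.load("detector", detector_v2)
print(registry.run(frame=None)["missing_helmet"])  # False
```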

This is where traditional OS design ends—and AI OS design begins.

BrainFrame REST API Design

BrainFrame’s REST APIs are organized into modular control categories:

- Stream Controls — Add IP cameras, USB cameras, video files.

- Alarm Controls — Define user-relevant events.

- Zone Controls — Specify areas and perimeters of interest.

- Inference APIs — Retrieve real-time inference results.

- Alert APIs — Access alarm outputs.

- Capsule Controls — Add or remove algorithms and models.

- Identity Controls — Upload identities for recognition.

- Premises Controls — Manage multi-site deployments.

- System Controls — Users, server configuration, etc.

These APIs abstract not just hardware but application-aware intelligence.
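To give a sense of how such categories can map onto HTTP, here is a sketch that builds (but does not send) JSON requests for three of them using Python's standard `urllib.request`. The base URL, endpoint paths, and field names are all hypothetical placeholders chosen for illustration, not BrainFrame's documented routes or schemas.

```python
import json
from typing import Any, Dict, Optional
from urllib.request import Request

BASE_URL = "http://localhost:8000"  # hypothetical server address


def build_request(method: str, path: str, body: Optional[Dict[str, Any]] = None) -> Request:
    """Build a JSON HTTP request; pass the result to urllib.request.urlopen() to send it."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    headers = {"Content-Type": "application/json"} if body is not None else {}
    return Request(BASE_URL + path, data=data, headers=headers, method=method)


# Stream Controls (hypothetical path and fields): register an IP camera.
add_stream = build_request("POST", "/api/streams", {
    "name": "Lobby",
    "url": "rtsp://192.0.2.10/stream1",  # 192.0.2.0/24 is reserved for documentation
})

# Zone Controls (hypothetical): a restricted area as a polygon in pixel coordinates.
add_zone = build_request("POST", "/api/zones", {
    "stream_name": "Lobby",
    "name": "Restricted",
    "coords": [[100, 100], [400, 100], [400, 400], [100, 400]],
})

# Alert APIs (hypothetical): fetch recent alerts.
list_alerts = build_request("GET", "/api/alerts")

for req in (add_stream, add_zone, list_alerts):
    print(req.get_method(), req.full_url)
```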

Why It Matters Now

The AI Operating System is the next major evolution in computing. It transforms AI from a specialized tool into a practical, deployable infrastructure layer for the real world.

For companies, this means:

- Faster innovation

- Smarter physical-world automation

- A structural advantage in an AI-driven economy

Is this a distant future? No. While a universal AI OS is still evolving, BrainFrame delivers this model today—specifically for vision AI.

You can deploy it to improve safety and security. Or you can build entirely new application categories on top of it. The era of application-aware AI infrastructure has begun. The future of AI everywhere starts here.